Generating a sitemap for a web app can be trivial (e.g. using free online tools such as xml-sitemaps.com). Things get a bit more complicated when the content that needs to be indexed in the sitemap is generated by the web app's users. Here is how to build and maintain a dynamic sitemap efficiently.

The most intuitive option is an endpoint that generates the sitemap on demand. This might work for small applications, but it becomes too slow once the number of URLs to index grows large. Additionally, every request for the sitemap queries the database, consuming resources that will either slow down the web app or generate additional costs.

The sitemap is still an XML file, and there is nothing wrong with that. The only particularity of a sitemap containing dynamic content is that it needs to be updated periodically. And, because we want to do that without deploying new code, the file should not be part of the web app's static assets.
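For reference, a sitemap in the sitemaps.org format is an XML `<urlset>` containing one `<url>` element per page, each with a `<loc>` holding the absolute URL. The following is a minimal sketch of generating such a file from a list of URLs; the `buildSitemapXml` name and the example URLs are hypothetical. Keep in mind that the protocol limits a single sitemap file to 50,000 URLs and 50 MB uncompressed, so larger sites need several files referenced from a sitemap index.

```typescript
// Entity-escape the five characters the sitemaps.org protocol requires escaping.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical helper: serialize absolute URLs into a sitemaps.org <urlset>.
function buildSitemapXml(urls: string[]): string {
  const entries = urls.map((url) => `  <url><loc>${escapeXml(url)}</loc></url>`);
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

// Example: one dynamic page whose query string contains an ampersand.
console.log(buildSitemapXml(["https://example.com/posts/abc?lang=en&ref=home"]));
```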

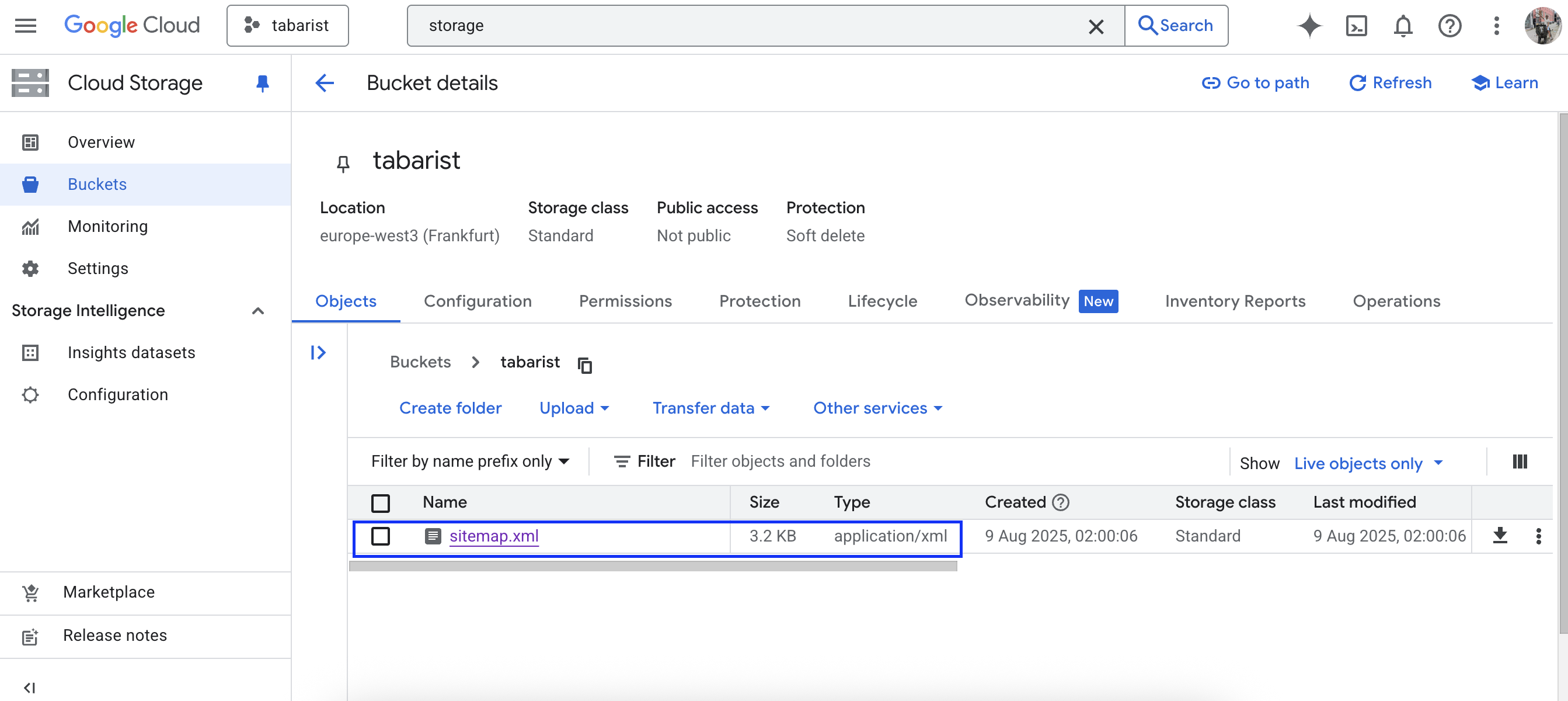

A good place to store a file that the web app needs to read and modify is a cloud storage bucket (e.g. Google Cloud Storage or Amazon S3 buckets). We can either create the file manually or handle the case where the file does not exist yet in code. Here I'm opting for the first option. Once the file exists, we read it every time we need to serve the sitemap (via a /sitemap.xml endpoint) and return its contents. Here is an example using firebase-admin and firebase-functions.
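A minimal sketch of what the /sitemap.xml handler has to do, with the bucket access hidden behind a hypothetical `SitemapStore` interface so the logic runs without Firebase. With firebase-admin, `read` could wrap `getStorage().bucket().file("sitemap.xml").download()`, and with firebase-functions the handler would be exported through `onRequest`; the file name, the 404 response for a missing file, and the one-hour `Cache-Control` value are all assumptions.

```typescript
// Hypothetical abstraction over the bucket file that holds the sitemap.
interface SitemapStore {
  // Resolves to the file contents, or null if the file does not exist.
  read(): Promise<string | null>;
}

interface SitemapResponse {
  status: number;
  headers: Record<string, string>;
  body: string;
}

// Serve the stored file as-is: the database is never queried on this path.
async function handleSitemapRequest(store: SitemapStore): Promise<SitemapResponse> {
  const xml = await store.read();
  if (xml === null) {
    // The file is expected to be created manually, so its absence means "not found".
    return { status: 404, headers: { "Content-Type": "text/plain" }, body: "Sitemap not found" };
  }
  return {
    status: 200,
    headers: {
      "Content-Type": "application/xml",
      // Assumption: the file changes at most daily, so a short cache lifetime is safe.
      "Cache-Control": "public, max-age=3600",
    },
    body: xml,
  };
}

// In-memory store, useful for local testing without a bucket.
class InMemorySitemapStore implements SitemapStore {
  private contents: string | null;

  constructor(contents: string | null) {
    this.contents = contents;
  }

  async read(): Promise<string | null> {
    return this.contents;
  }
}
```

Inside an `onRequest` handler, the returned object maps roughly onto the Express-style response, e.g. `res.status(r.status).set(r.headers).send(r.body)`.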

The challenging part of this approach is deciding when and how to update the file. It can be tempting to update the file in real time, every time a user creates or deletes a resource, so the sitemap is always up to date. However, users will likely create content concurrently, and we don't want to risk parallel requests reading the file and overwriting each other's changes. It is safer to let the sitemap go a few hours out of date and update it periodically via a scheduled job.
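The risk is easiest to see in a small simulation. Below, a hypothetical in-memory file stands in for the bucket, and two requests update it in real time: each reads the file, appends its URL and writes the result back. Both reads happen before either write, so the second write silently discards the first URL (a lost update).

```typescript
// Hypothetical in-memory stand-in for the sitemap file in the bucket.
function createInMemoryFile(initial: string[]) {
  let contents = [...initial];
  return {
    read: async (): Promise<string[]> => [...contents],
    write: async (urls: string[]): Promise<void> => {
      contents = urls;
    },
  };
}

type InMemoryFile = ReturnType<typeof createInMemoryFile>;

// Naive real-time update: read-modify-write with no coordination between requests.
async function addUrlInRealTime(file: InMemoryFile, url: string): Promise<void> {
  const current = await file.read();
  await file.write([...current, url]);
}

// Two resources created at the same time trigger two overlapping updates.
async function simulateConcurrentCreates(): Promise<string[]> {
  const file = createInMemoryFile(["https://example.com/posts/1"]);
  await Promise.all([
    addUrlInRealTime(file, "https://example.com/posts/2"),
    addUrlInRealTime(file, "https://example.com/posts/3"),
  ]);
  return file.read();
}

// Resolves to [".../posts/1", ".../posts/3"]: the update adding posts/2 was overwritten.
simulateConcurrentCreates().then((urls) => console.log(urls));
```

A single scheduled writer sidesteps this, since only one process ever modifies the file.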

Another consideration is whether the job should recompute the entire file or make incremental updates to the existing content. While the first option sounds simpler, it also consumes more resources: with a cloud-hosted database billed per document read (e.g. Firestore), reading every resource on every run will substantially increase the cost of running the web app (see this Stack Overflow post). Incremental updates are a bit trickier, but they pay off. In essence, we need to:

- Flag new resources after they are created so the job can identify the new URLs that must be added to the sitemap.

- Track resource deletions so the job can identify the URLs that must be removed from the sitemap.

The job then obtains the list of URLs to add and remove, reads the contents of the file, applies the corresponding changes, and writes the file back to the storage bucket. The implementation will depend largely on the server architecture. Here is an example using Firestore and Firebase functions, assuming that:

- Each resource contains a property (here, created) with its creation timestamp. To find out which resources need to be added to the sitemap, we filter on that property for values between the last time the job ran and now.

- We use the onDocumentDeleted Firebase trigger to add the corresponding document ID to another Firestore collection (here, sitemapDeletions) every time a resource is deleted.

- The URLs can be built from each resource ID alone, without any additional logic. If we wanted to support different types of dynamic URLs, we would have to store additional metadata in the sitemapDeletions collection.

- We have two functions (getNewResourceIds and getDeletedResourceIds) that retrieve the complete lists of IDs to be added to and removed from the sitemap. These functions query the Firestore collections and return plain lists of strings.
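The steps above can be sketched as follows, with the Firestore and storage access injected through a hypothetical `SitemapJobDeps` interface so the job logic runs on its own. `getNewResourceIds` and `getDeletedResourceIds` are the functions described above, but their signatures here are assumptions, as are `BASE_URL` and the `readSitemapUrls`/`writeSitemapUrls` helpers that would parse and serialize the XML file in the bucket.

```typescript
// Hypothetical dependencies, injected so the job logic runs without Firebase.
interface SitemapJobDeps {
  // Reads the <loc> URLs currently stored in the bucket's sitemap file.
  readSitemapUrls(): Promise<string[]>;
  // Serializes the URLs as sitemap XML and uploads the file to the bucket.
  writeSitemapUrls(urls: string[]): Promise<void>;
  // IDs of resources whose `created` timestamp falls between `since` and `until`.
  getNewResourceIds(since: Date, until: Date): Promise<string[]>;
  // IDs recorded in the sitemapDeletions collection.
  getDeletedResourceIds(): Promise<string[]>;
}

// Hypothetical base URL: each resource URL is derived from its ID alone.
const BASE_URL = "https://example.com/resources/";
function toUrl(id: string): string {
  // Encoding is defensive; Firestore auto-generated IDs are alphanumeric.
  return BASE_URL + encodeURIComponent(id);
}

// One run of the scheduled job. Returns true if the file was rewritten.
async function updateSitemap(deps: SitemapJobDeps, lastRun: Date, now: Date): Promise<boolean> {
  // Only the changed IDs are fetched; existing entries come from the file itself.
  const [newIds, deletedIds] = await Promise.all([
    deps.getNewResourceIds(lastRun, now),
    deps.getDeletedResourceIds(),
  ]);
  if (newIds.length === 0 && deletedIds.length === 0) {
    return false; // Nothing changed since the last run: skip the read and the write.
  }

  const removed = new Set(deletedIds.map(toUrl));
  // Keep existing entries in their original order unless they were deleted.
  const urls = (await deps.readSitemapUrls()).filter((url) => !removed.has(url));
  const present = new Set(urls);
  for (const url of newIds.map(toUrl)) {
    // Skip duplicates and resources that were created and deleted in the same window.
    if (!present.has(url) && !removed.has(url)) {
      urls.push(url);
      present.add(url);
    }
  }

  await deps.writeSitemapUrls(urls);
  return true;
}
```

In a Firebase project, `getNewResourceIds` might run a Firestore query along the lines of `.where("created", ">", lastRun)`, and `updateSitemap` could be called from a function registered with `onSchedule("0 0 * * *", ...)` from `firebase-functions/v2/scheduler`. How `lastRun` is tracked is left open here; for a daily job, taking `now` minus 24 hours is the simplest choice, at the cost of missing changes if a run fails.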

Similarly to the sitemapDeletions collection, we could define a sitemapAdditions collection and use the onDocumentCreated trigger to record the ID every time a new resource is created. Note, however, that this would require additional write operations to remove the IDs from the collection after the job has updated the file: a symmetric approach, but with higher costs.

Once deployed, the job will run every day at midnight, looking for changes in resources and updating the sitemap when needed. Happy coding!